How to run a cost-efficient ClickHouse cluster with separated storage & compute

You’ve got data. Lots of it. And you want to query it. ClickHouse is a great choice, but it can be expensive to run, especially in its default configuration.

By default, ClickHouse writes data to the local disk, which, in most cloud environments, is expensive block storage. To manage cost, it makes sense to separate storage from compute and shift data to cheaper object storage. This reduces costs and unlocks elasticity (you no longer have to add disks as your data grows). But object storage has high latency, which makes it impractical for serving low-latency queries directly.

Fortunately, we can configure ClickHouse to use a hybrid approach: durable object storage combined with ephemeral local storage. This achieves a better price/performance ratio. Read on for the expected savings and a blueprint for achieving them.

Durable block storage is fast but expensive

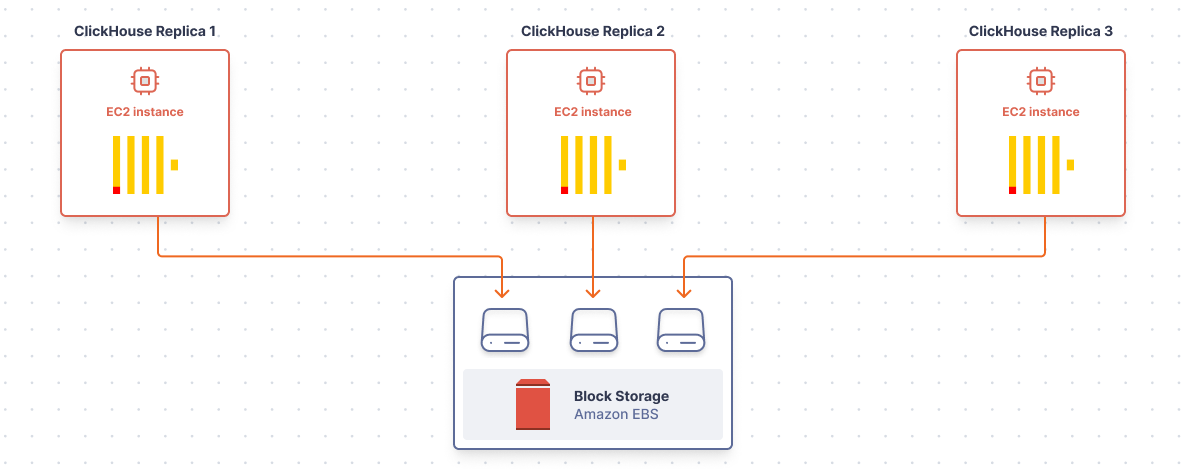

All the major cloud providers (AWS, GCE and Azure) offer durable block storage. For example, AWS’s offering, Elastic Block Store (EBS), allows you to attach persistent disks over the network to your EC2 instances. These products offer both performance and durability. For more performance, we can pay for more IOPS and throughput. And durability means that if our EC2 instance fails, the EBS volume still exists and can be attached to a new instance without data loss. For databases like ClickHouse, that mixture of performance and durability is critical, because (1) we want to answer queries quickly, and (2) once we’ve stored data, we never want to lose it.

As it turns out, this mixture of features is expensive. For example, assume you have a single ClickHouse server with 1 TiB of storage. A gp3 volume with 10,000 IOPS and 1,000 MiB/s of throughput costs $151.92 / month.

This is the storage cost for a single disk on a single ClickHouse server. For high availability, you’d want to run three servers, so in a 3-node cluster this price grows to $455.76 / month.
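The gp3 figure breaks down into a capacity charge plus charges for IOPS and throughput above the volume's free baseline. The sketch below reproduces it; the unit prices are our assumption (us-east-1 on-demand list prices) and the function name is hypothetical, so check current AWS pricing for your region.

```python
# Sketch: gp3 monthly cost, assuming us-east-1 on-demand list prices
# (these unit prices are our assumption; check current AWS pricing).
GP3_PER_GIB = 0.08      # $ per GiB-month of capacity
GP3_FREE_IOPS = 3_000   # IOPS included with every gp3 volume
GP3_PER_IOPS = 0.005    # $ per provisioned IOPS-month above the free baseline
GP3_FREE_MIBPS = 125    # throughput (MiB/s) included with every gp3 volume
GP3_PER_MIBPS = 0.04    # $ per provisioned MiB/s-month above the free baseline


def gp3_monthly_cost(size_gib: int, iops: int, throughput_mibps: int) -> float:
    """Capacity charge plus charges for IOPS and throughput beyond the baseline."""
    capacity = size_gib * GP3_PER_GIB
    extra_iops = max(0, iops - GP3_FREE_IOPS) * GP3_PER_IOPS
    extra_throughput = max(0, throughput_mibps - GP3_FREE_MIBPS) * GP3_PER_MIBPS
    return round(capacity + extra_iops + extra_throughput, 2)


per_node = gp3_monthly_cost(size_gib=1024, iops=10_000, throughput_mibps=1_000)
cluster = round(3 * per_node, 2)  # one volume per replica
print(per_node, cluster)  # 151.92 455.76
```

Capacity accounts for $81.92 of each volume's cost; the extra 7,000 IOPS and 875 MiB/s add $35.00 each.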

Compute costs

You will also pay for compute on each server (ideally with lots of RAM). For example, in AWS, the memory-optimized R5b instance types offer plenty of RAM and are EBS-optimized. An r5b.xlarge with 4 vCPUs and 32 GiB of RAM costs $0.2980 / hour on demand. So, assuming 3 replicas, our compute costs come out to $652.62 / month.

Of course, AWS offers commitment discounts (Reserved Instances and Savings Plans) that lower this price, but on-demand pricing is a useful baseline. Adding our compute costs to our storage costs yields a total of $1,108.38 / month for a 3-node cluster.
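The baseline arithmetic can be sketched as follows. The hourly price and the EBS total come from the text; the 730-hour month is our assumption (it is the convention AWS pricing uses).

```python
# Sketch of the baseline: three r5b.xlarge nodes, each with a 1 TiB gp3 volume.
# HOURS_PER_MONTH = 730 is our assumption (AWS's usual monthly-hours convention).
HOURS_PER_MONTH = 730
R5B_XLARGE_HOURLY = 0.298      # on-demand $/hour, from the text
REPLICAS = 3
EBS_CLUSTER_MONTHLY = 455.76   # three gp3 volumes, from the storage section

compute = round(R5B_XLARGE_HOURLY * HOURS_PER_MONTH * REPLICAS, 2)
total = round(compute + EBS_CLUSTER_MONTHLY, 2)
storage_share = EBS_CLUSTER_MONTHLY / total

print(compute, total, f"{storage_share:.0%}")  # 652.62 1108.38 41%
```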

Compute dominates the cost, but storage is still 41% of the bill. And this is for only 1 TiB of storage! If you have more than 1 TiB of data, storing it all in EBS (especially if not all of it is actively queried) is too expensive. Can we reduce our total cost?

Durable object storage is cheap but high-latency

ClickHouse also supports storing data in object storage like Amazon S3 (or GCS or Azure Blob Storage). S3 is durable and cheap. For example, 1 TiB in S3 is just $23.55 / month.

Assuming each of our three replicas keeps its own copy of the data in S3, storage for the cluster costs 3 × $23.55 = $70.65 / month. Moving from EBS to S3 would therefore save $385.11 / month, an 84% decrease in storage costs. But if you always have to fetch from object storage, your queries will suffer high latency. We can reduce our total cost, but at the expense of performance. Not good for the database known for “not pressing the brake pedal” (“не тормозит”).
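A sketch of the storage comparison, under two stated assumptions: S3 Standard costs $0.023 per GiB-month (its first pricing tier), and each of the three replicas writes its own copy of the 1 TiB to S3.

```python
# Sketch: S3 Standard storage for the same data, assuming $0.023 per GiB-month
# (first pricing tier) and that each replica keeps its own copy in S3.
S3_STANDARD_PER_GIB = 0.023
REPLICAS = 3
EBS_CLUSTER_MONTHLY = 455.76   # three 1 TiB gp3 volumes, from the EBS section

s3_per_copy = round(1024 * S3_STANDARD_PER_GIB, 2)
s3_cluster = round(REPLICAS * s3_per_copy, 2)
savings = round(EBS_CLUSTER_MONTHLY - s3_cluster, 2)

print(s3_per_copy, s3_cluster, savings, f"{savings / EBS_CLUSTER_MONTHLY:.0%}")
# 23.55 70.65 385.11 84%
```

If the replicas shared a single copy instead, the savings would be larger, but the three-copy assumption is the conservative one.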

Ephemeral local storage is faster and “free”

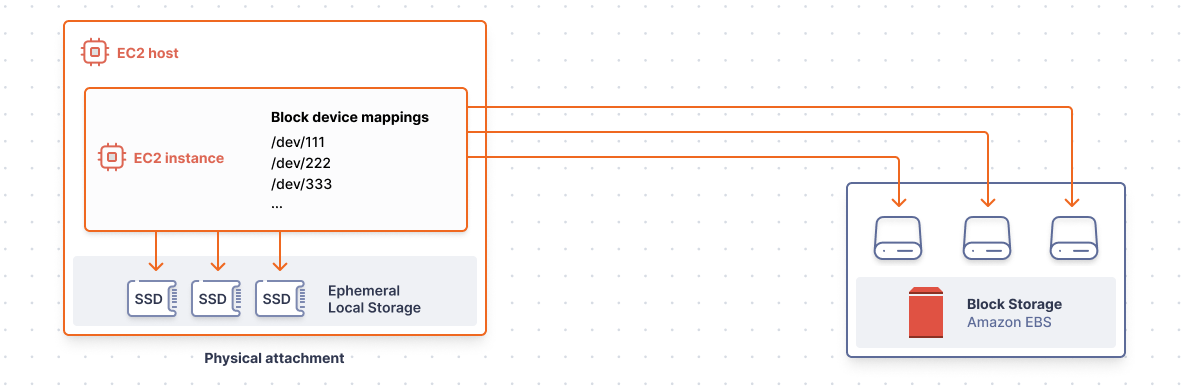

In addition to durable block and object storage, all the major cloud providers (AWS, GCE, and Azure) also offer ephemeral local storage. AWS calls these “instance store volumes”, and they are disks that are physically attached to the host computer. Depending on the EC2 instance type, the disks may be NVMe SSDs that perform faster than their EBS counterparts (remember, EBS volumes are attached over the network, while instance store volumes are physically attached).

Even better, instance store volumes are “free”! (They are included in the EC2 instance price).

Compute costs

Compare an r5b.xlarge to an r7gd.xlarge. Both have 4 vCPUs and 32 GiB of RAM, but the r7gd.xlarge is cheaper and includes 237 GiB of ephemeral local NVMe SSD storage.

Of course, the elephant in the room is that these disks are ephemeral: data on them survives a reboot, but it is lost when the EC2 instance stops, hibernates, or terminates, or when the underlying disk fails. Sounds dangerous, but the savings are too good to ignore. How can we make this safe? 🤔

Best of both worlds

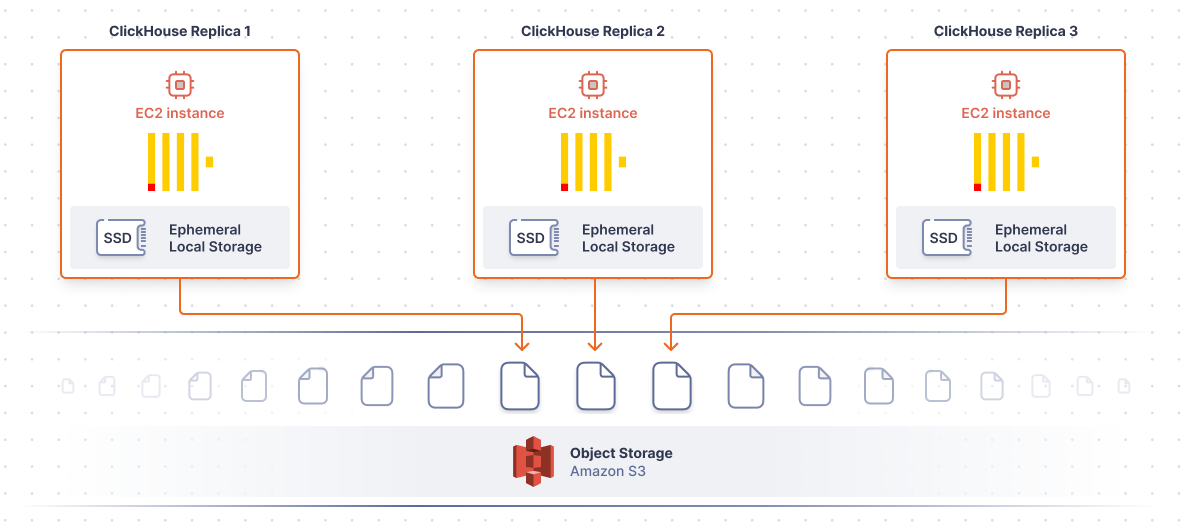

Fortunately, ClickHouse allows us to combine

- ephemeral local storage: fast and “free”

- durable object storage: cheap but high-latency

to get the best of both worlds: cheap, durable storage supporting low-latency queries.

The trick is to use S3 for durability and the ephemeral local storage (fast NVMe SSDs) as a cache. This hybrid approach gives effectively unlimited storage in S3 (great for historical data) while keeping actively queried records “warm” in ephemeral local storage.

To achieve this, we must pay for compute that includes ephemeral local storage. Choosing r7gd.xlarge gives each node 237 GiB of local NVMe SSD and, across our three nodes, costs almost $57 / month less than r5b.xlarge (an almost 9% decrease in compute costs).

With this configuration, the total monthly cost is down from $1,108.38 to $666.78, a roughly 40% decrease. More importantly, we’re no longer limited by EBS volume sizes. We can store a nearly unlimited amount of historical data in S3 and cache the working set in local storage.
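The sketch below compares the two configurations. The r7gd.xlarge hourly price is our assumption (a us-east-1 on-demand price; the text does not state it), as is the 730-hour month; the S3 figure assumes three copies of 1 TiB in S3 Standard. Costs are rounded per node before summing.

```python
# Sketch: swap r5b.xlarge for r7gd.xlarge and EBS for S3.
# R7GD_XLARGE_HOURLY is our assumption (us-east-1 on-demand; not stated in the text).
HOURS_PER_MONTH = 730
REPLICAS = 3
R5B_XLARGE_HOURLY = 0.298     # from the text
R7GD_XLARGE_HOURLY = 0.2722   # assumed
S3_CLUSTER_MONTHLY = 70.65    # three copies of 1 TiB in S3 Standard
EBS_CLUSTER_MONTHLY = 455.76  # three 1 TiB gp3 volumes


def cluster_compute(hourly: float) -> float:
    """Monthly on-demand cost for REPLICAS nodes, rounded per node then summed."""
    per_node = round(hourly * HOURS_PER_MONTH, 2)
    return round(REPLICAS * per_node, 2)


r5b = cluster_compute(R5B_XLARGE_HOURLY)     # 652.62
r7gd = cluster_compute(R7GD_XLARGE_HOURLY)   # 596.13
baseline = round(r5b + EBS_CLUSTER_MONTHLY, 2)
hybrid = round(r7gd + S3_CLUSTER_MONTHLY, 2)

print(f"compute savings: ${r5b - r7gd:.2f} ({(r5b - r7gd) / r5b:.1%})")
print(f"total: ${baseline:.2f} -> ${hybrid:.2f} ({(baseline - hybrid) / baseline:.0%} lower)")
```

Most of the saving comes from dropping the EBS volumes; the cheaper instance type contributes the smaller share.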

With this approach, you could reinvest the savings from EBS volumes in larger instance sizes. Note that as you move from r7gd.xlarge to r7gd.2xlarge and beyond, you get not only more vCPUs and more RAM but also more ephemeral local storage, all of which improves performance.

Controlling S3 API costs

There’s one thing we haven’t accounted for yet, and that’s S3 API costs. ClickHouse will need to read objects from object storage to cache in ephemeral local storage. Amazon prices those reads at $0.0004 per thousand GET requests. In our experience, read costs are negligible, since most queries will hit the cache, but you need to pay attention to writes.

Every write to ClickHouse generates a part, which is written to object storage. For S3, that means PUT requests (typically at least one per file in the part), which Amazon prices at $0.005 per thousand, an order of magnitude more than reads. Additionally, ClickHouse merges parts into new, larger parts, and these get written to S3, too. All this means that if you have a write-heavy application, you may see significant charges for S3 PUT requests.
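To get a feel for how quickly PUT charges add up, here is a rough, hypothetical estimate for a single replica. It assumes each insert creates exactly one part stored as 10 S3 objects, and it ignores merges, which add further PUTs; both the object count and the insert rates are illustrative, not measurements.

```python
# Hypothetical estimate of S3 PUT charges from inserts alone, per replica.
# OBJECTS_PER_PART and the insert rates are illustrative assumptions;
# merges add further PUTs on top of this.
PUT_PRICE_PER_1000 = 0.005      # $ per 1,000 PUT requests, from the text
SECONDS_PER_MONTH = 730 * 3600  # assumes a 730-hour month


def monthly_insert_put_cost(inserts_per_second: float, objects_per_part: int) -> float:
    """Assumes each insert creates one new part, written as `objects_per_part` objects."""
    puts = inserts_per_second * SECONDS_PER_MONTH * objects_per_part
    return round(puts / 1000 * PUT_PRICE_PER_1000, 2)


for rate in (10, 1):
    cost = monthly_insert_put_cost(rate, objects_per_part=10)
    print(f"{rate} insert(s)/s -> ${cost:,.2f} / month in PUTs")
```

Under these assumptions, 10 small inserts per second cost about $1,314 / month in PUTs, while batching the same data into one insert per second brings that to about $131.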

If this is a problem, you can mitigate it in a few ways:

- Disable merges on the object storage-backed disk in ClickHouse.

- Raise the flush_interval_milliseconds on system log tables like query_log, part_log, etc.

- Introduce a small EBS volume (for example, 100 GiB) that ClickHouse will write to and merge on, before moving parts to S3.
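Raising flush_interval_milliseconds helps because each flush of a log table writes a new part. The hypothetical sketch below estimates the PUT cost of those flushes on one server. It assumes the 7,500 ms interval from ClickHouse's default config.xml, five busy log tables, and 10 S3 objects per flushed part; all three numbers are illustrative.

```python
# Hypothetical estimate: PUTs from system log flushes on one server.
# Assumes a 7,500 ms default flush interval, 5 busy log tables, and
# 10 S3 objects per flushed part (all illustrative).
PUT_PRICE_PER_1000 = 0.005
SECONDS_PER_MONTH = 730 * 3600


def log_flush_put_cost(flush_interval_ms: int, log_tables: int, objects_per_part: int) -> float:
    """Each flush of each log table writes one part of `objects_per_part` objects."""
    flushes_per_table = SECONDS_PER_MONTH * 1000 / flush_interval_ms
    puts = flushes_per_table * log_tables * objects_per_part
    return round(puts / 1000 * PUT_PRICE_PER_1000, 2)


default = log_flush_put_cost(7_500, log_tables=5, objects_per_part=10)
raised = log_flush_put_cost(60_000, log_tables=5, objects_per_part=10)
print(default, raised)  # 87.6 10.95
```

The cost scales inversely with the interval, so raising it from 7.5 s to 60 s cuts this line item by 8×.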

Theory to practice

You can configure ClickHouse as described above on any of the major cloud providers, since they all offer ephemeral local storage and cheap, durable object storage. In our opinion, Kubernetes and clickhouse-operator are the most user-friendly way to set this up (and monitor it).

To do this, you will need to:

- Create a Kubernetes cluster: In AWS, you can use EKS.

- Install clickhouse-operator to the Kubernetes cluster: The clickhouse-operator enables you to install, configure, and manage ClickHouse in Kubernetes.

- Install local-volume-provisioner: The local-volume-provisioner exposes ephemeral local storage as PersistentVolumes in Kubernetes. You can follow this guide for setting up the local-volume-provisioner and StorageClass for ephemeral local NVMe SSDs in EKS.

- Create a NodeGroup with ephemeral local storage: In AWS, you can use eksctl to create a NodeGroup with an appropriate EC2 instance type. By setting bootstrap commands, you can mount the local NVMe SSDs at instance start time. The local-volume-provisioner will then expose these as PersistentVolumes in Kubernetes, tagged with the ephemeral StorageClass.

- Create an S3 bucket (or other object storage) for storing data: In AWS, you should ensure the bucket region matches your ClickHouse cluster region. You should also enable the S3 gateway endpoint for your VPC, if you haven’t already.

- Create a ClickHouseInstallation with the clickhouse-operator: Ensure you configure the ClickHouse Pods with VolumeClaims for ephemeral local storage. The ClickHouse config.xml should set object storage as the primary disk and use ephemeral local storage as a cache (see our sample below). In AWS, remember to assign your ClickHouse Pods’ ServiceAccount an IAM role with permission to access your S3 bucket.

- Test it out: You should be able to create a table, insert data to the table, and observe files written to object storage. Then, after querying the table, you should be able to observe cache entries written to ephemeral local storage. See “Testing it out” below.

Sample ClickHouse config.xml

Sections omitted to focus on storage configuration:

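```xml
<!-- ${REGION}, ${BUCKET} and ${LOCAL_STORAGE_MOUNT_PATH} are placeholders for your own values -->
<clickhouse>
    <storage_configuration>
        <disks>
            <!-- Durable object storage: the S3 bucket holds the data -->
            <s3>
                <type>s3</type>
                <endpoint>https://s3.${REGION}.amazonaws.com/${BUCKET}/</endpoint>
                <!-- Take credentials from the environment (e.g. the Pod's IAM role) -->
                <use_environment_credentials>true</use_environment_credentials>
            </s3>
            <!-- Ephemeral local storage: a cache layered on top of the s3 disk -->
            <s3_cache>
                <type>cache</type>
                <disk>s3</disk>
                <path>${LOCAL_STORAGE_MOUNT_PATH}</path>
                <!-- Allow write-through caching (inserts and merges), not only reads -->
                <cache_on_write_operations>1</cache_on_write_operations>
                <!-- Sized to the r7gd.xlarge's local NVMe SSD -->
                <max_size>237Gi</max_size>
            </s3_cache>
        </disks>
        <policies>
            <!-- Make the cached S3 disk the default storage policy -->
            <default>
                <volumes>
                    <s3>
                        <disk>s3_cache</disk>
                    </s3>
                </volumes>
            </default>
        </policies>
    </storage_configuration>
</clickhouse>
```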

Testing it out

Assuming your default storage policy in ClickHouse uses S3, create a table:

```sql
CREATE TABLE test (value Int64) ENGINE = MergeTree ORDER BY value;

INSERT INTO test SELECT * FROM numbers(1000);
```

Next, observe objects written to S3:

```shell
$ aws s3 ls s3://${BUCKET} --recursive
2024-03-25 16:47:36 792648 db/aaa/appoxikggtqctexghzcstgvcuwhfy
2024-06-04 19:57:14 323641 db/aaa/bwikrtxsnshouwzernjqhnwwnvcsq
2024-09-26 23:08:55 5248 db/aaa/cbitpopwmqjsgtqhtvpfzxonbppjy
…
```

Next, select data from your test table:

```sql
SELECT * FROM test LIMIT 10;
```

And observe cache entries written to ephemeral local storage:

```shell
$ find ${LOCAL_STORAGE_MOUNT_PATH} -type f
/var/lib/clickhouse-ephemeral/cache/aaa/aaa9dcf3a28ab612edbf10d0264adc47/0
/var/lib/clickhouse-ephemeral/cache/aaa/aaa0c8e1150c6704c383019bd1eec51b/0
/var/lib/clickhouse-ephemeral/cache/aaa/aaa77f73a27f62cc0429e7f804ca653f/0
…
```

You can realize up to 40% in cost savings on your ClickHouse cluster by separating storage and compute. You do this by moving data to cheap object storage and caching it in ephemeral local storage. Because S3 capacity is effectively unlimited, unlike a fixed-size EBS volume, this also gives you storage elasticity: you won’t have to add disks as your data grows. You may need to pay attention to S3 API costs, depending on your write patterns, but ClickHouse offers ways to reduce these, too.